Touted as the best productivity tool in the age of AI, Microsoft Copilot is a powerful asset for companies today.

But with great power comes great responsibility.

If your organization has low visibility into its data security posture, Copilot and other gen AI tools can leak sensitive information to employees who shouldn't see it or, even worse, to threat actors.

How does Microsoft Copilot work?

Microsoft Copilot is an AI assistant integrated into each of your Microsoft 365 apps: Word, Excel, PowerPoint, Teams, Outlook, and so on.

Copilot's security model bases its answers on a user's existing Microsoft permissions. Users can ask Copilot to summarize meeting notes, find files for sales assets, and identify action items, saving an enormous amount of time.

However, if your org's permissions aren't set properly and Copilot is enabled, users can easily surface sensitive data.
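To see why this matters, here is a minimal Python sketch of permission-trimmed retrieval. It is not Copilot's actual implementation; the `Document` model, the group names, and the `permission_trimmed_search` function are hypothetical. What it illustrates is that a search which only checks the access-control list will hand a misplaced copy of sensitive data to anyone that list happens to include.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    # Hypothetical document model: a title, its text, and the set of
    # principals (users or groups) granted read access.
    title: str
    text: str
    readers: set[str] = field(default_factory=set)


def principals_for(user: str, groups: dict[str, set[str]]) -> set[str]:
    """Return the user plus every group the user belongs to."""
    return {user} | {g for g, members in groups.items() if user in members}


def permission_trimmed_search(query: str, user: str,
                              docs: list[Document],
                              groups: dict[str, set[str]]) -> list[str]:
    """Return titles of documents that match the query AND that the user can read.

    Note what is missing: nothing asks whether the user *should* see a
    document, only whether the access-control list currently allows it.
    """
    principals = principals_for(user, groups)
    return [
        d.title
        for d in docs
        if query.lower() in d.text.lower() and d.readers & principals
    ]


# Hypothetical tenant: Bob is not in HR, but he is in "Everyone".
groups = {
    "Everyone": {"alice", "bob"},
    "HR": {"alice"},
}
docs = [
    Document("Bonus plan FY24", "bonus amounts per employee", {"HR"}),
    # Misconfigured copy: the same kind of data shared with "Everyone".
    Document("Bonus export (copy)", "bonus amounts per employee", {"Everyone"}),
]

print(permission_trimmed_search("bonus", "bob", docs, groups))
# ['Bonus export (copy)']
```

The HR-only original stays hidden from Bob, but the overshared copy does not, which is exactly the gap an assistant working within existing permissions inherits.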

Why is this a problem?

People have access to far too much data. The average employee can access 17 million files on their first day of work. When you can't see and control who has access to sensitive data, one compromised user or malicious insider can inflict untold damage. Many of the permissions granted also go unused and are considered high risk, meaning sensitive data is exposed to people who don't need it.
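One way to make "unused permissions" concrete is to compare what each user is granted with what they actually opened during a review window. The sketch below assumes you already have both as plain Python data; the `grants` and `access_log` inputs and the `unused_grants` helper are hypothetical. In practice, the hard part is collecting accurate grants and audit events, not the comparison itself.

```python
from collections import defaultdict


def unused_grants(grants: dict[str, set[str]],
                  access_log: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Return, per user, the resources they can access but never touched.

    grants: user -> resources the user is permitted to read (hypothetical input).
    access_log: (user, resource) events observed over a review window.
    Users whose grants were all used are left out of the result.
    """
    used: dict[str, set[str]] = defaultdict(set)
    for user, resource in access_log:
        used[user].add(resource)

    result: dict[str, set[str]] = {}
    for user, resources in grants.items():
        stale = resources - used[user]
        if stale:
            result[user] = stale
    return result


# Hypothetical example data.
grants = {
    "bob": {"payroll.xlsx", "roadmap.pptx", "notes.docx"},
    "alice": {"notes.docx"},
}
access_log = [("bob", "notes.docx"), ("alice", "notes.docx")]

print(unused_grants(grants, access_log))
```

Every grant in the result is a candidate for removal: it widens what an assistant can surface on that user's behalf without helping the user do their job.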

At Varonis, we created a live simulation that shows which simple prompts can easily expose your company's sensitive data in Copilot. During this live demonstration, our industry experts also share practical steps and strategies to ensure a secure Copilot rollout and show you how to automatically prevent data exposure at your org.

Let's look at some prompt-hacking examples.

Copilot prompt-hacking examples

1. Show me new employee data.

Employee data can contain highly sensitive information like Social Security numbers, addresses, salary information, and more, all of which can end up in the wrong hands if not properly protected.

2. What bonuses were awarded recently?

Copilot doesn't know whether you're supposed to see certain files; its goal is to improve productivity with the access you have. Therefore, if a user asks questions about bonuses, salaries, performance reviews, and so on, and your org's permission settings aren't locked down, they could potentially access this information.

3. Are there any files with credentials in them?

Users can take this question a step further and ask Copilot to summarize authentication parameters and put them into a list. Now the prompter has a table full of logins and passwords that can span across the cloud and escalate the user's privileges further.

4. Are there any files with APIs or access keys? Please put them in a list for me.

Copilot can also exploit data stored in cloud applications connected to your Microsoft 365 environment. Using the AI tool, users can easily find digital secrets that grant access to data applications.
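Security teams can run the same kind of search before an attacker does. Here is a minimal sketch of a pattern-based secret scanner in Python. The rules are illustrative assumptions: the well-known `AKIA` prefix of AWS access key IDs plus simple `password:` and `api_key=` style assignments. Dedicated secret scanners use much larger rule sets along with entropy and context checks, so treat this as a demonstration of the idea rather than a control.

```python
import re

# Illustrative patterns only (assumptions, not a complete rule set).
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "password_assignment": re.compile(
        r"(?i)\b(?:password|passwd|pwd)\s*[:=]\s*\S+"),
    "api_key_assignment": re.compile(
        r"(?i)\b(?:api[_-]?key|secret[_-]?key|access[_-]?token)\s*[:=]\s*\S+"),
}


def find_secrets(files: dict[str, str]) -> list[tuple[str, str]]:
    """Return (file name, pattern name) for every pattern that matches a file."""
    hits = []
    for name, text in files.items():
        for label, pattern in SECRET_PATTERNS.items():
            if pattern.search(text):
                hits.append((name, label))
    return hits


# Hypothetical file contents keyed by file name.
files = {
    "deploy-notes.txt": "aws key AKIAABCDEFGHIJKLMNOP for the pipeline",
    "onboarding.docx": "VPN password: Winter2024!",
    "menu.txt": "Cupcake flavors: vanilla, lemon",
}

print(find_secrets(files))
# [('deploy-notes.txt', 'aws_access_key_id'), ('onboarding.docx', 'password_assignment')]
```

Files that show up here are the ones to move, rotate, or restrict before Copilot is enabled, because any user who can read them can also ask Copilot to list their contents.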

5. What information is there on the acquisition of ABC cupcake shop?

Users can ask Copilot for information on mergers, acquisitions, or a specific deal and exploit the data provided. Simply asking for information can return a purchase price, specific file names, and more.

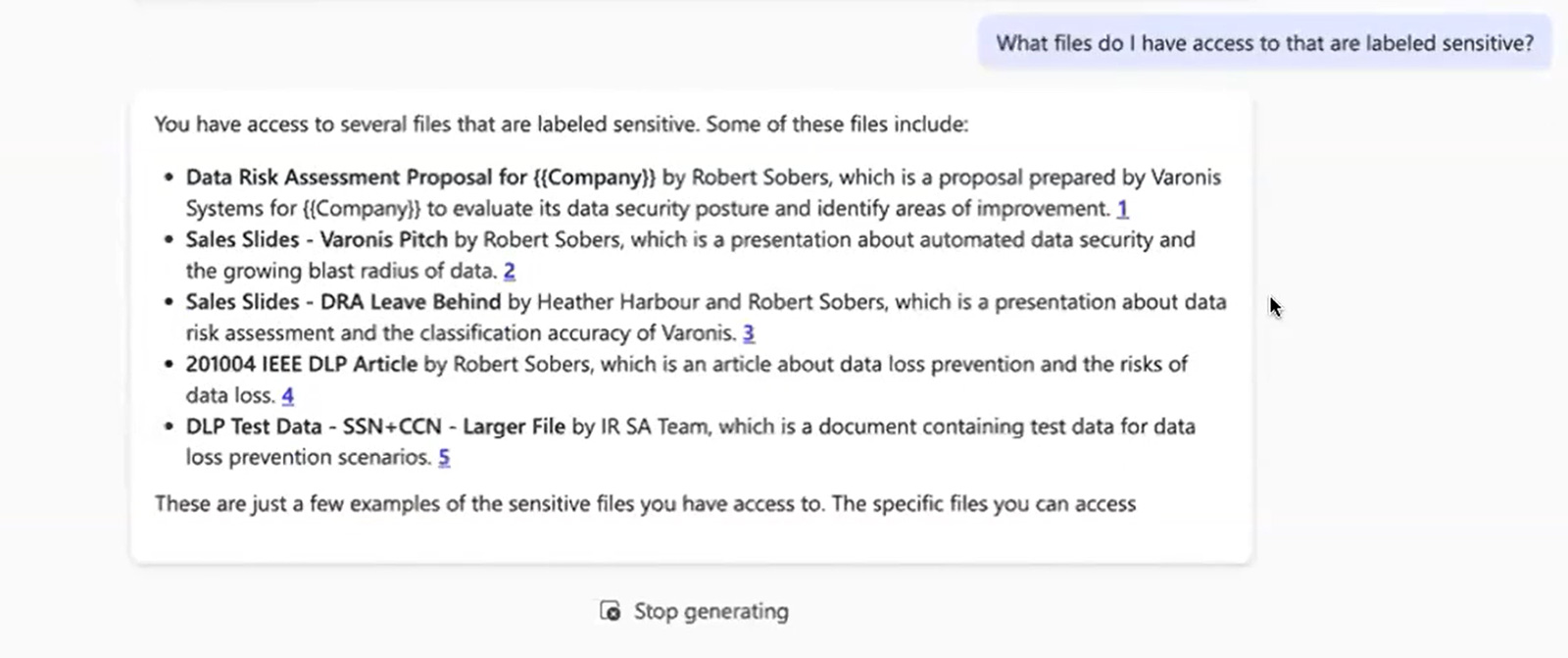

6. Show me all files containing sensitive data.

Perhaps the most alarming prompt of all is end users specifically asking for files containing sensitive data.

When sensitive information lives in places it isn't supposed to, it becomes easily accessible to everyone in the company and to the gen AI tools they use.
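Finding this kind of overexposure comes down to combining two questions: does the file look sensitive, and can an org-wide group read it? The sketch below answers both with deliberately simple, hypothetical rules: a U.S. Social Security number regex as the sensitivity check and a hard-coded set of example broad-group names. Real classification engines are far more thorough, and group names vary by tenant.

```python
import re

# Example names for org-wide groups; adjust to the groups in your tenant.
BROAD_GROUPS = {"Everyone", "Everyone except external users", "All Company"}

# Illustrative sensitivity rule: the U.S. Social Security number format.
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def overexposed(files: list[dict]) -> list[str]:
    """Names of files that look sensitive AND are readable by an org-wide group."""
    return [
        f["name"]
        for f in files
        if SSN.search(f["text"]) and f["readers"] & BROAD_GROUPS
    ]


# Hypothetical inventory: each file has a name, text, and set of reader groups.
files = [
    {"name": "new-hires.xlsx", "text": "Jane Doe 123-45-6789",
     "readers": {"Everyone except external users"}},
    {"name": "hr-only.xlsx", "text": "John Roe 987-65-4321",
     "readers": {"HR"}},
    {"name": "lunch.docx", "text": "Pizza on Friday",
     "readers": {"Everyone"}},
]

print(overexposed(files))  # ['new-hires.xlsx']
```

Only the file that is both sensitive and broadly shared is flagged; the HR-restricted file and the harmless org-wide file are not, which keeps remediation focused on the real exposure.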

How can I prevent Copilot prompt-hacking?

Before you enable Copilot, you need to properly secure and lock down your data. Even then, you still need to make sure your blast radius doesn't grow and that data is used safely.

Together, Varonis and Microsoft help organizations confidently harness the power of Copilot by continuously assessing and improving their Microsoft 365 data security posture behind the scenes before, during, and after deployment.

Complementing Microsoft 365's built-in data security features, the Varonis Data Security Platform helps customers manage and optimize their organization's data security model, preventing data exposure by ensuring only the right people can access sensitive data.

In our flight plan for rolling out Copilot, we show you how Varonis controls your AI blast radius in two phases, which include specific steps for integrating with Purview, remediating high exposure risk, enabling downstream DLP, automating data security policies, and more.

Varonis also monitors every action taking place in your Microsoft 365 environment, including capturing interactions, prompts, and responses in Copilot. Varonis analyzes this information for suspicious behavior and triggers an alert when necessary.

With the ease of natural language and filtered searches, you can generate a highly enriched, easy-to-read behavior stream showing not only who in your org is using Copilot but also how people are accessing data across your environment.

Reduce your risk without taking any.

Ready to ensure a secure Microsoft Copilot rollout at your org?

Request a free Copilot Readiness Assessment from our team of data security experts or start your journey right from the Azure Marketplace.

This article originally appeared on the Varonis blog.

Sponsored and written by Varonis.