Documentation abounds for any topic or question you might have, but when you try to apply something to your own uses, it suddenly becomes hard to find what you need. This problem doesn't only exist for you.

In this blog post, we'll look at how LangChain implements RAG so that you can apply the same principles to any application with LangChain and an LLM.

What Is RAG?

This term is used a lot in today's technical landscape, but what does it actually mean? Here are a few definitions from various sources:

- “Retrieval-Augmented Generation (RAG) is the process of optimizing the output of a large language model, so it references an authoritative knowledge base outside of its training data sources before generating a response.” - Amazon Web Services

- “Retrieval-augmented generation (RAG) is a technique for enhancing the accuracy and reliability of generative AI models with facts fetched from external sources.” - NVIDIA

- “Retrieval-augmented generation (RAG) is an AI framework for improving the quality of LLM-generated responses by grounding the model on external sources of knowledge to supplement the LLM’s internal representation of information.” - IBM Research

In this blog post, we'll focus on how to write the retrieval query that supplements or grounds the LLM's answer. We will use Python with LangChain, a framework used to write generative AI applications that interact with LLMs.

The Data Set

First, let's take a quick look at our data set. We'll be working with the SEC (Securities and Exchange Commission) filings from the EDGAR (Electronic Data Gathering, Analysis, and Retrieval system) database. The SEC filings are a treasure trove of information, containing financial statements, disclosures, and other important details about publicly traded companies.

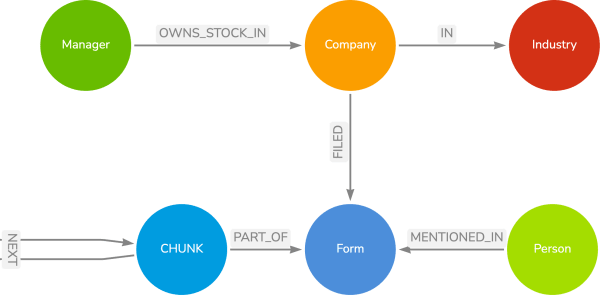

The data contains companies who have filed financial forms (10k, 13, etc.) with the SEC. Different managers own stock in these companies, and the companies are part of different industries. In the financial forms themselves, various people are mentioned in the text, and we have broken the text down into smaller chunks for the vector search queries to handle. We have taken each chunk of text in a form and created a vector embedding that is also stored on the CHUNK node. When we run a vector search query, we will compare the vector of the query to the vectors of the CHUNK nodes to find the most similar text.

Let's look at how to construct our query!
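
To make the comparison step concrete, here is a minimal sketch in plain Python of ranking chunks by cosine similarity. The tiny three-dimensional vectors and the `chunks` dictionary are made up for illustration; in practice the embeddings come from an embedding model, and the Neo4j vector index does this comparison for us (which similarity function it uses depends on how the index was configured).

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical chunk embeddings (real ones have hundreds of dimensions).
chunks = {
    "chunk-1": [0.9, 0.1, 0.0],
    "chunk-2": [0.0, 1.0, 0.0],
    "chunk-3": [0.7, 0.7, 0.0],
}
query_vector = [1.0, 0.0, 0.0]

# Rank chunk ids from most to least similar to the query vector.
ranked = sorted(
    chunks,
    key=lambda chunk_id: cosine_similarity(query_vector, chunks[chunk_id]),
    reverse=True,
)
print(ranked)
```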

Retrieval Query Examples

I used a few sources to help me understand how to write a retrieval query in LangChain. The first was a blog post by Tomaz Bratanic, who wrote about how to work with the Neo4j vector index in LangChain using Wikipedia article data. The second was a query from the GenAI Stack, which is a collection of demo applications built with Docker that uses the StackOverflow data set containing technical questions and answers.

Both queries are included below.

# Tomaz's blog post retrieval query

retrieval_query = """

OPTIONAL MATCH (node)<-[:EDITED_BY]-(p)

WITH node, score, collect(p) AS editors

RETURN node.data AS text,

score,

node {.*, vector: Null, data: Null, editors: editors} AS metadata

"""

# GenAI Stack retrieval query

retrieval_query="""

WITH node AS question, score AS similarity

CALL { WITH question

MATCH (question)<-[:ANSWERS]-(answer)

WITH answer

ORDER BY answer.is_accepted DESC, answer.score DESC

WITH collect(answer)[..2] AS answers

RETURN reduce(str='', answer IN answers | str +

'\n### Answer (Accepted: '+ answer.is_accepted +

' Score: ' + answer.score+ '): '+ answer.body + '\n') as answerTexts

}

RETURN '##Question: ' + question.title + '\n' + question.body + '\n'

+ answerTexts AS text, similarity as score, {source: question.link} AS metadata

ORDER BY similarity ASC // so that best answers are the last

"""

Now, notice that these queries don't look complete. We wouldn't start a Cypher query with an OPTIONAL MATCH or WITH clause. This is because the retrieval query is added on to the end of the vector search query. Tomaz's post shows us the implementation of the vector search query.

read_query = (

"CALL db.index.vector.queryNodes($index, $k, $embedding) "

"YIELD node, score "

) + retrieval_query

So LangChain first calls the db.index.vector.queryNodes() procedure (more info in the documentation) to find the most similar nodes and passes along (YIELD) each similar node and its similarity score. It then adds the retrieval query to the end of the vector search query to pull additional context. This is very helpful to know, especially as we construct the retrieval query, and for when we start testing results!
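
As a quick way to see the combined query, here is a small sketch that does the same concatenation with a placeholder retrieval query and prints the result. The `node.text` property and the empty metadata map are assumptions for illustration only; swap in your own retrieval query.

```python
# Placeholder retrieval query (assumes chunks store their text in a `text` property).
retrieval_query = """
RETURN node.text AS text, score, {} AS metadata
"""

# Vector search prefix, as shown in Tomaz's post.
vector_search = (
    "CALL db.index.vector.queryNodes($index, $k, $embedding) "
    "YIELD node, score "
)

# The retrieval query is simply appended to the vector search.
read_query = vector_search + retrieval_query
print(read_query)
```

Printing the full string like this can help when testing, because the combined query (with the `$index`, `$k`, and `$embedding` parameters supplied) is what actually runs against the database.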

The second thing to note is that both queries return the same three variables: text, score, and metadata. This is what LangChain expects, so you will get errors if these are not returned. The text variable contains the related text, the score is the similarity score for the chunk against the search text, and the metadata can contain any additional information that we want for context.

Constructing the Retrieval Query

Let's build our retrieval query! We know the similarity search query will return the node and score variables, so we can pass those into our retrieval query to pull connected data for those similar nodes. We also have to return the text, score, and metadata variables.
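
To illustrate that three-column contract, here is a small sketch that checks a result row before using it. This is not LangChain's own code; `RetrievedChunk`, `row_to_chunk`, and the sample row are hypothetical names and data invented for this example.

```python
from dataclasses import dataclass, field

REQUIRED_COLUMNS = ("text", "score", "metadata")


@dataclass
class RetrievedChunk:
    """Hypothetical container for one row returned by the retrieval query."""
    text: str
    score: float
    metadata: dict = field(default_factory=dict)


def row_to_chunk(row):
    """Convert one result row (a dict) into a RetrievedChunk, or raise ValueError."""
    missing = [name for name in REQUIRED_COLUMNS if name not in row]
    if missing:
        raise ValueError(f"retrieval query must return: {', '.join(missing)}")
    return RetrievedChunk(row["text"], row["score"], row["metadata"] or {})


# Made-up row with the three expected columns.
good_row = {"text": "Revenue grew in 2023.", "score": 0.92, "metadata": {"source": "10-K"}}
chunk = row_to_chunk(good_row)
print(chunk)
```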

retrieval_query = """

WITH node AS doc, score as similarity

// some more query here

RETURN <something> as text, similarity as score,

{<something>: <something>} AS metadata

"""

Okay, there's our skeleton. Now what do we want in the middle? We know our data model will pull CHUNK nodes in the similarity search (these will be the node AS doc values in our WITH clause above). Chunks of text don't give a lot of context, so we want to pull in the Form, Person, Company, Manager, and Industry nodes that are connected to the CHUNK nodes. We also include a sequence of text chunks on the NEXT relationship, so we can pull the next and previous chunks of text around a similar one. The similarity search will also pull all the chunks with their similarity scores, and we want to narrow that down a bit…maybe just the top 5 most similar chunks.

retrieval_query = """

WITH node AS doc, score as similarity

ORDER BY similarity DESC LIMIT 5

CALL { WITH doc

OPTIONAL MATCH (prevDoc:Chunk)-[:NEXT]->(doc)

OPTIONAL MATCH (doc)-[:NEXT]->(nextDoc:Chunk)

RETURN prevDoc, doc AS result, nextDoc

}

// some more query here

RETURN coalesce(prevDoc.text,'') + coalesce(doc.text,'') + coalesce(nextDoc.text,'') as text,

similarity as score,

{<something>: <something>} AS metadata

"""

Now we keep the 5 most similar chunks, then pull the previous and next chunks of text in the CALL {} subquery. We also change the RETURN to concatenate the text of the previous, current, and next chunks all into the text variable. The coalesce() function is used to handle null values, so if there is no previous or next chunk, it will just return an empty string.

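For readers more at home in Python than Cypher, here is a rough analog of that concatenation. The `coalesce` helper mimics the Cypher function, and the `chunk_texts` list is made-up sample data standing in for chunks linked by NEXT relationships. The null handling matters because in Cypher, concatenating a string with null yields null, which would wipe out the whole text value for the first or last chunk of a form.

```python
def coalesce(*values):
    """Return the first value that is not None, like Cypher's coalesce()."""
    for value in values:
        if value is not None:
            return value
    return None


# Hypothetical chunk texts in document order; NEXT links each one to its neighbor.
chunk_texts = ["Item 1. Business. ", "We sell widgets. ", "Item 1A. Risk Factors. "]


def text_with_neighbors(index):
    """Concatenate previous, current, and next chunk text, tolerating missing neighbors."""
    prev_text = chunk_texts[index - 1] if index > 0 else None
    next_text = chunk_texts[index + 1] if index + 1 < len(chunk_texts) else None
    return coalesce(prev_text, "") + coalesce(chunk_texts[index], "") + coalesce(next_text, "")


print(text_with_neighbors(0))
```
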
Let's add a bit more context to pull in the other related entities in the graph.

retrieval_query = """

WITH node AS doc, score as similarity

ORDER BY similarity DESC LIMIT 5

CALL { WITH doc

OPTIONAL MATCH (prevDoc:Chunk)-[:NEXT]->(doc)

OPTIONAL MATCH (doc)-[:NEXT]->(nextDoc:Chunk)

RETURN prevDoc, doc AS result, nextDoc

}

WITH result, prevDoc, nextDoc, similarity

CALL {

WITH result

OPTIONAL MATCH (result)-[:PART_OF]->(:Form)<-[:FILED]-(company:Company), (company)<-[:OWNS_STOCK_IN]-(manager:Manager)

WITH result, company.name as companyName, apoc.text.join(collect(manager.managerName),';') as managers

WHERE companyName IS NOT NULL OR managers > ""

WITH result, companyName, managers

ORDER BY result.score DESC

RETURN result as doc, result.score as popularity, companyName, managers

}

RETURN coalesce(prevDoc.text,'') + coalesce(doc.text,'') + coalesce(nextDoc.text,'') as text,

similarity as score,

{documentId: coalesce(doc.chunkId,''), company: coalesce(companyName,''), managers: coalesce(managers,''), source: doc.source} AS metadata

"""

The second CALL {} subquery pulls in any related Form, Company, and Manager nodes (if they exist, hence the OPTIONAL MATCH). We collect the managers into a list, join them into a single string, and ensure the company name and manager list are not null or empty. We then order the results by a score property on the chunk (it doesn't currently provide value, but it could track how many times the doc has been retrieved).

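If the collect-and-join step is unfamiliar, this sketch shows roughly the same grouping in Python. The `matched_paths` rows are invented sample data, with one row per matched chunk, company, and manager path. The columns that are not aggregated serve as the grouping key, which mirrors how Cypher groups rows when an aggregate such as collect() appears in a WITH clause.

```python
from collections import defaultdict

# Hypothetical (chunk id, company name, manager name) rows, one per matched path.
matched_paths = [
    ("chunk-42", "Example Corp", "Fund A"),
    ("chunk-42", "Example Corp", "Fund B"),
    ("chunk-43", "Other Inc", "Fund A"),
]

# Group manager names by the non-aggregated columns (chunk and company).
grouped = defaultdict(list)
for chunk_id, company_name, manager_name in matched_paths:
    grouped[(chunk_id, company_name)].append(manager_name)

# Join each group's managers into one ';'-separated string.
managers_by_chunk = {key: ";".join(names) for key, names in grouped.items()}
print(managers_by_chunk)
```
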
Since only the text, score, and metadata properties get returned, we need to map the extra values (documentId, company, and managers) into the metadata dictionary field. This means updating the final RETURN statement to include them.
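
Once the extra values travel in metadata, downstream code can use them when building the context for the LLM. Below is one possible sketch; `format_context` and the sample row are hypothetical, and the row is shaped like the final RETURN of the retrieval query above, with managers arriving as a single ';'-separated string.

```python
def format_context(rows):
    """Render retrieved rows as a context block for an LLM prompt."""
    sections = []
    for row in rows:
        meta = row["metadata"]
        # Split the ';'-joined managers string back into individual names.
        managers = [name for name in meta.get("managers", "").split(";") if name]
        header = f"[{meta.get('documentId', '')}] {meta.get('company', '')}"
        if managers:
            header += f" (managers: {', '.join(managers)})"
        sections.append(f"{header}\n{row['text']}")
    return "\n\n".join(sections)


# Made-up row shaped like the retrieval query's output.
rows = [
    {
        "text": "We sell widgets.",
        "score": 0.91,
        "metadata": {
            "documentId": "chunk-42",
            "company": "Example Corp",
            "managers": "Fund A;Fund B",
            "source": "example-10k",
        },
    }
]
context = format_context(rows)
print(context)
```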

Wrapping Up!

In this post, we looked at what RAG is and how retrieval queries work in LangChain. We also looked at a few examples of Cypher retrieval queries for Neo4j and constructed our own. We used the SEC filings data set for our query and saw how to pull extra context and return it mapped to the three properties LangChain expects.

If you are building with or interested in more Generative AI content, check out the resources linked below. Happy coding!