In this blog, you will learn how to implement Retrieval Augmented Generation (RAG) using Weaviate, LangChain4j, and LocalAI. This implementation allows you to ask questions about your documents using natural language. Enjoy!

1. Introduction

In the previous post, Weaviate was used as a vector database in order to perform a semantic search. The source documents are two Wikipedia documents: the discography and the list of songs recorded by Bruce Springsteen. The interesting part of these documents is that they contain facts and are mainly in a table format. Parts of these documents are converted to Markdown in order to have a better representation. The Markdown files are embedded in Collections in Weaviate. The result was amazing: every question asked resulted in the correct answer. That is, the correct segment was returned. You still needed to extract the answer yourself, but this was quite easy.

However, can this be solved by providing the Weaviate search results to an LLM (Large Language Model) by creating the right prompt? Will the LLM be able to extract the correct answers to the questions?

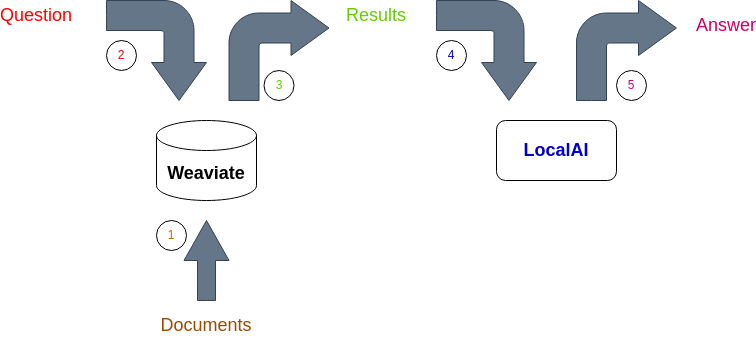

The setup is visualized in the graph above and consists of the following steps:

- The documents are embedded and stored in Weaviate;

- The question is embedded and a semantic search is performed using Weaviate;

- Weaviate returns the semantic search results;

- The result is added to a prompt and fed, using LangChain4j, to LocalAI, which runs an LLM;

- The LLM returns the answer to the question.
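The steps above can be sketched in a few lines of plain Java. This is only an illustration of the flow, not the implementation used in this blog: the class name `RagPipelineSketch` is hypothetical, and the retriever and the LLM are stand-in functions (assumptions) so that the sketch runs without Weaviate or LocalAI.

```java
import java.util.function.Function;

// Minimal sketch of the RAG flow. The retriever and the LLM are passed in as
// plain functions, so the flow can be tried without Weaviate or LocalAI.
final class RagPipelineSketch {

    private final Function<String, String> retriever; // question -> best matching segment
    private final Function<String, String> llm;       // prompt -> answer

    RagPipelineSketch(Function<String, String> retriever, Function<String, String> llm) {
        this.retriever = retriever;
        this.llm = llm;
    }

    // Search for the best segment, add it to a prompt, and ask the LLM.
    String ask(String question) {
        String segment = retriever.apply(question);
        String prompt = "Answer the following question: " + question + "\n"
                + "Use the following data to answer the question: " + segment;
        return llm.apply(prompt);
    }

    public static void main(String[] args) {
        // Stand-ins (assumptions): a fixed segment and an "LLM" that echoes its prompt.
        RagPipelineSketch rag = new RagPipelineSketch(
                question -> "song=Adam Raised a Cain, originalRelease=Darkness on the Edge of Town, year=1978",
                prompt -> "PROMPT RECEIVED:\n" + prompt);
        System.out.println(rag.ask("on which album was \"adam raised a cain\" originally released?"));
    }
}
```

In the real setup, the retriever is the Weaviate semantic search and the LLM call goes through LangChain4j to LocalAI.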

Weaviate also supports RAG, so why bother using LocalAI and LangChain4j? Unfortunately, Weaviate does not support integration with LocalAI and only cloud LLMs can be used. If your documents contain sensitive information or information you do not want to send to a cloud-based LLM, you need to run a local LLM, and this can be done using LocalAI and LangChain4j.

If you want to run the examples in this blog, you need to read the previous blog.

The sources used in this blog can be found on GitHub.

2. Prerequisites

The prerequisites for this blog are:

- Basic knowledge of embedding and vector stores;

- Basic Java knowledge, Java 21 is used;

- Basic knowledge of Docker;

- Basic knowledge of LangChain4j;

- You need Weaviate and the documents need to be embedded, see the previous blog on how to do so;

- You need LocalAI if you want to run the examples, see a previous blog on how to make use of LocalAI. Version 2.2.0 is used for this blog;

- If you want to learn more about RAG, read this blog.

3. Create the Setup

Before getting started, there is some setup to do.

3.1 Setup LocalAI

LocalAI must be running and configured. How to do so is explained in the blog Running LLM’s Locally: A Step-by-Step Guide.

3.2 Setup Weaviate

Weaviate must be started. The only difference with the Weaviate blog is that you will run it on port 8081 instead of port 8080. This is because LocalAI is already running on port 8080.

Start the compose file from the root of the repository.

    $ docker compose -f docker/compose-embed-8081.yaml up

Run class EmbedMarkdown in order to embed the documents (change the port to 8081!). Three collections are created:

- CompilationAlbum: a list of all compilation albums of Bruce Springsteen;

- Song: a list of all songs by Bruce Springsteen;

- StudioAlbum: a list of all studio albums of Bruce Springsteen.

4. Implement RAG

4.1 Semantic Search

The first part of the implementation is based on the semantic search implementation of class SearchCollectionNearText. It is assumed here that you know in which collection (argument className) to search.

In the previous post, you noticed that, strictly speaking, you do not need to know which collection to search. However, at this moment, it makes the implementation a bit easier and the result remains identical.

The code takes the question and embeds it with the help of NearTextArgument. The GraphQL API of Weaviate is used to perform the search.

    private static void askQuestion(String className, Field[] fields, String question, String extraInstruction) {
        Config config = new Config("http", "localhost:8081");
        WeaviateClient client = new WeaviateClient(config);

        // Request the search metadata next to the regular fields
        Field additional = Field.builder()
                .name("_additional")
                .fields(Field.builder().name("certainty").build(), // only supported if distance==cosine
                        Field.builder().name("distance").build()   // always supported
                ).build();
        Field[] allFields = Arrays.copyOf(fields, fields.length + 1);
        allFields[fields.length] = additional;

        // Embed the question
        NearTextArgument nearText = NearTextArgument.builder()
                .concepts(new String[]{question})
                .build();

        // Return only the best matching segment
        Result<GraphQLResponse> result = client.graphQL().get()
                .withClassName(className)
                .withFields(allFields)
                .withNearText(nearText)
                .withLimit(1)
                .run();

        if (result.hasErrors()) {
            System.out.println(result.getError());
            return;
        }
        ...

4.2 Create Prompt

The result of the semantic search needs to be fed to the LLM together with the question itself. A prompt is created which instructs the LLM to answer the question using the result of the semantic search. Also, the option to add extra instructions is implemented. Later on, you will see what to do with that.

    private static String createPrompt(String question, String inputData, String extraInstruction) {
        return "Answer the following question: " + question + "\n" +
                extraInstruction + "\n" +
                "Use the following data to answer the question: " + inputData;
    }

4.3 Use LLM

The last thing to do is to feed the prompt to the LLM and print the question and answer to the console.

    private static void askQuestion(String className, Field[] fields, String question, String extraInstruction) {
        ...
        // LocalAI runs on port 8080, temperature 0.0 for reproducible answers
        ChatLanguageModel model = LocalAiChatModel.builder()
                .baseUrl("http://localhost:8080")
                .modelName("lunademo")
                .temperature(0.0)
                .build();

        String answer = model.generate(createPrompt(question, result.getResult().getData().toString(), extraInstruction));
        System.out.println(question);
        System.out.println(answer);
    }

4.4 Questions

The questions to be asked are the same as in the previous posts. They will invoke the code above.

    public static void main(String[] args) {
        askQuestion(Song.NAME, Song.getFields(), "on which album was \"adam raised a cain\" originally released?", "");
        askQuestion(StudioAlbum.NAME, StudioAlbum.getFields(), "what is the highest chart position of \"Greetings from Asbury Park, N.J.\" in the US?", "");
        askQuestion(CompilationAlbum.NAME, CompilationAlbum.getFields(), "what is the highest chart position of the album \"tracks\" in canada?", "");
        askQuestion(Song.NAME, Song.getFields(), "in which year was \"Highway Patrolman\" released?", "");
        askQuestion(Song.NAME, Song.getFields(), "who produced \"all or nothin' at all?\"", "");
    }

The complete source code can be viewed here.

5. Results

Run the code and the result is the following:

- On which album was “Adam Raised a Cain” originally released?

The album “Darkness on the Edge of Town” was originally released in 1978, and the song “Adam Raised a Cain” was included on that album.

- What is the highest chart position of “Greetings from Asbury Park, N.J.” in the US?

The highest chart position of “Greetings from Asbury Park, N.J.” in the US is 60.

- What is the highest chart position of the album “Tracks” in Canada?

Based on the provided data, the highest chart position of the album “Tracks” in Canada is -. This is because the data does not include any Canadian chart positions for this album.

- In which year was “Highway Patrolman” released?

The song “Highway Patrolman” was released in 1982.

- Who produced “All or Nothin’ at All”?

The song “All or Nothin’ at All” was produced by Bruce Springsteen, Roy Bittan, Jon Landau, and Chuck Plotkin.

All answers to the questions are correct. The most important job has been done in the previous post, where embedding the documents in the correct way resulted in finding the correct segments. An LLM is able to extract the answer to the question when it is fed with the correct data.

6. Caveats

During the implementation, I ran into some strange behavior which is quite important to know about when you are starting to implement your use case.

6.1 Format of Weaviate Results

The Weaviate response contains a GraphQLResponse object, something like the following:

    GraphQLResponse(
      data={
        Get={
          Songs=[
            {_additional={certainty=0.7534831166267395, distance=0.49303377},
             originalRelease=Darkness on the Edge of Town,
             producers=Jon Landau Bruce Springsteen Steven Van Zandt (assistant),
             song="Adam Raised a Cain", writers=Bruce Springsteen, year=1978}
          ]
        }
      },
      errors=null)

In the code, only the data part is added to the prompt.

    String answer = model.generate(createPrompt(question, result.getResult().getData().toString(), extraInstruction));

What happens when you add the response as-is to the prompt?

    String answer = model.generate(createPrompt(question, result.getResult().toString(), extraInstruction));

Running the code returns the following wrong answer for question 3 and some unnecessary additional information for question 4. The other questions are answered correctly.

- What is the highest chart position of the album “Tracks” in Canada?

Based on the provided data, the highest chart position of the album “Tracks” in Canada is 50.

- In which year was “Highway Patrolman” released?

Based on the provided GraphQLResponse, “Highway Patrolman” was released in 1982.
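Since the text surrounding the data clearly influences the answer, one option is to go a step further and pass only the facts to the prompt. The sketch below is an assumption and not part of the original code: it presumes that a single search result is available as a `Map<String, Object>` (which is how the printed response above looks), and the class name `SearchResultFormatter` is hypothetical. Whether this improves the answers for the model used here has not been verified.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class SearchResultFormatter {

    // Turns one result object into "key: value" lines and skips the _additional metadata.
    static String toPromptData(Map<String, Object> resultObject) {
        List<String> lines = new ArrayList<>();
        for (Map.Entry<String, Object> entry : resultObject.entrySet()) {
            if ("_additional".equals(entry.getKey())) {
                continue; // certainty and distance are search metadata, not facts
            }
            lines.add(entry.getKey() + ": " + entry.getValue());
        }
        return String.join("\n", lines);
    }

    public static void main(String[] args) {
        // Hand-built example object, mirroring the fields of the response shown above.
        Map<String, Object> song = new LinkedHashMap<>();
        song.put("_additional", Map.of("certainty", 0.75, "distance", 0.49));
        song.put("originalRelease", "Darkness on the Edge of Town");
        song.put("song", "\"Adam Raised a Cain\"");
        song.put("year", "1978");
        System.out.println(toPromptData(song));
    }
}
```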


6.2 Format of Prompt

The code contains functionality to add extra instructions to the prompt. As you have probably noticed, this functionality is not used: every question passes an empty string. Let’s see what happens when you remove this from the prompt. The createPrompt method becomes the following (I did not remove everything so that only a minor code change is needed).

    private static String createPrompt(String question, String inputData, String extraInstruction) {
        return "Answer the following question: " + question + "\n" +
                "Use the following data to answer the question: " + inputData;
    }

Running the code adds some extra information to the answer to question 3 which is not entirely correct. It is correct that the album has chart positions for the United States, United Kingdom, Germany, and Sweden. It is not correct that the album reached the top 10 in the UK and US charts. All other questions are answered correctly.

- What is the highest chart position of the album “Tracks” in Canada?

Based on the provided data, the highest chart position of the album “Tracks” in Canada is not specified. The data only includes chart positions for other countries such as the United States, United Kingdom, Germany, and Sweden. However, the album did reach the top 10 in the UK and US charts.
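It helps to see how small the change to the prompt actually is. The sketch below (the class name `PromptVariants` and the placeholder data are assumptions) builds both prompt variants and prints them with visible line breaks. With an empty extra instruction, the only difference is one empty line between the question and the data.

```java
final class PromptVariants {

    // Original format: an empty extra instruction still yields an empty line.
    static String withInstructionLine(String question, String inputData, String extraInstruction) {
        return "Answer the following question: " + question + "\n" +
                extraInstruction + "\n" +
                "Use the following data to answer the question: " + inputData;
    }

    // Format from this section: the instruction line is removed.
    static String withoutInstructionLine(String question, String inputData) {
        return "Answer the following question: " + question + "\n" +
                "Use the following data to answer the question: " + inputData;
    }

    public static void main(String[] args) {
        String question = "what is the highest chart position of the album \"tracks\" in canada?";
        String data = "<search result>"; // placeholder for the Weaviate data
        // Make line breaks visible so the difference is easy to spot.
        System.out.println(withInstructionLine(question, data, "").replace("\n", "\\n"));
        System.out.println(withoutInstructionLine(question, data).replace("\n", "\\n"));
    }
}
```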

Using an LLM remains a bit brittle. You cannot always trust the answer it gives. Changing the prompt accordingly seems to make it possible to minimize the hallucinations of an LLM. It is therefore important that you collect feedback from your users in order to identify when an LLM seems to hallucinate. This way, you will be able to improve the responses to the users. An interesting blog is written by Fiddler which addresses this kind of issue.
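Collecting such feedback does not need much to get started. The following is a minimal in-memory sketch under stated assumptions: the class `FeedbackLog` and its methods are hypothetical, and a real application would persist the entries instead of keeping them in a list. Storing the prompt next to the answer makes it possible to reproduce a flagged answer later.

```java
import java.util.ArrayList;
import java.util.List;

final class FeedbackLog {

    // One question/answer pair together with the verdict of the user.
    static final class Entry {
        final String question;
        final String prompt;
        final String answer;
        final boolean helpful;

        Entry(String question, String prompt, String answer, boolean helpful) {
            this.question = question;
            this.prompt = prompt;
            this.answer = answer;
            this.helpful = helpful;
        }
    }

    private final List<Entry> entries = new ArrayList<>();

    // Store what was asked, what was sent to the LLM, and what came back.
    void record(String question, String prompt, String answer, boolean helpful) {
        entries.add(new Entry(question, prompt, answer, helpful));
    }

    // Answers marked as not helpful are candidates for a closer look at prompt and data.
    List<Entry> flagged() {
        List<Entry> result = new ArrayList<>();
        for (Entry entry : entries) {
            if (!entry.helpful) {
                result.add(entry);
            }
        }
        return result;
    }
}
```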

7. Conclusion

In this blog, you learned how to implement RAG using Weaviate, LangChain4j, and LocalAI. The results are quite amazing. Embedding documents the right way, filtering the results, and feeding them to an LLM is a very powerful combination that can be used in many use cases.