Over the previous few years, Apache Kafka has emerged because the main commonplace for streaming information. Quick-forward to the current day: Kafka has achieved ubiquity, being adopted by no less than 80% of the Fortune 100. This widespread adoption is attributed to Kafka’s structure, which fits far past primary messaging. Kafka’s structure versatility makes it exceptionally appropriate for streaming information at an enormous “web” scale, making certain fault tolerance and information consistency essential for supporting mission-critical purposes.

Flink is a high-throughput, unified batch and stream processing engine, famend for its functionality to deal with steady information streams at scale. It seamlessly integrates with Kafka and provides sturdy assist for exactly-once semantics, making certain every occasion is processed exactly as soon as, even amidst system failures. Flink emerges as a pure alternative as a stream processor for Kafka. Whereas Apache Flink enjoys vital success and recognition as a device for real-time information processing, accessing ample assets and present examples for studying Flink may be difficult.

On this article, I’ll information you thru the step-by-step means of integrating Kafka 2.13-3.7.0 with Flink 1.18.1 to devour information from a subject and course of it inside Flink on the single-node cluster. Ubuntu-22.04 LTS has been used as an OS within the cluster.

Assumptions

- The system has a minimal of 8 GB RAM and 250 GB SSD together with Ubuntu-22.04.2 amd64 because the working system.

- OpenJDK 11 is put in with

JAVA_HOMEsetting variable configuration. - Python 3 or Python 2 together with Perl 5 is accessible on the system.

- Single-node Apache Kafka-3.7.0 cluster has been up and operating with Apache Zookeeper -3.5.6. (Please learn here how one can arrange a Kafka cluster.).

Set up and Begin Flink 1.18.1

- The binary distribution of Flink-1.18.1 may be downloaded here.

- Extract the archive flink-1.18.1-bin-scala_2.12.tgz on the terminal utilizing

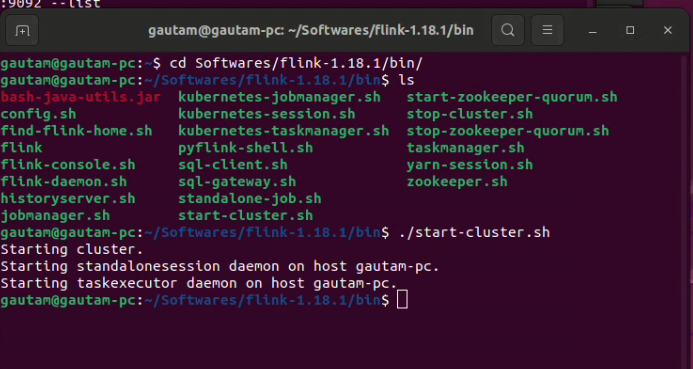

$ tar -xzf flink-1.18.1-bin-scala_2.12.tgz. After profitable extraction, listing flink-1.18.1 will likely be created. Please make it possible for inside itbin/,conf/, andexamples/directories can be found. - Navigate to the

binlisting by the terminal, and execute$ ./bin/start-cluster.shto begin the single-node Flink cluster.

- Furthermore, we are able to make the most of Flink’s internet UI to observe the standing of the cluster and operating jobs by accessing the browser at port 8081.

- The Flink cluster may be stopped by executing

$ ./bin/stop-cluster.sh.

Checklist of Dependent JARs

The next .jars needs to be included within the classpath/construct file:

I’ve created a primary Java program utilizing Eclipse IDE 23-12 to constantly devour messages inside Flink from a Kafka matter. Dummy string messages are being printed to the subject utilizing Kafka’s built-in kafka-console-publisher script. Upon arrival within the Flink engine, no information transformation happens for every message. As an alternative, an extra string is solely appended to every message and printed for verification, making certain that messages are constantly streamed to Flink.

bundle com.dataview.flink;

import org.apache.flink.api.widespread.eventtime.WatermarkStrategy;

import org.apache.flink.api.widespread.features.MapFunction;

import org.apache.flink.api.widespread.serialization.SimpleStringSchema;

import org.apache.flink.connector.kafka.supply.KafkaSource;

import org.apache.flink.connector.kafka.supply.enumerator.initializer.OffsetsInitializer;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.setting.StreamExecutionEnvironment;

import com.dataview.flink.util.IKafkaConstants;

public class readFromKafkaTopic {

public static void important(String[] args) throws Exception {

StreamExecutionEnvironment see = StreamExecutionEnvironment.getExecutionEnvironment();

KafkaSource<String> supply = KafkaSource.<String>builder()

.setBootstrapServers(IKafkaConstants.KAFKA_BROKERS)

.setTopics(IKafkaConstants.FIRST_TOPIC_NAME)

.setGroupId(IKafkaConstants.GROUP_ID_CONFIG)

.setStartingOffsets(OffsetsInitializer.earliest())

.setValueOnlyDeserializer(new SimpleStringSchema())

.construct();

DataStream<String> messageStream = see.fromSource(supply, WatermarkStrategy.noWatermarks(), "Kafka Supply");

messageStream.rebalance().map(new MapFunction<String, String>() {

personal static remaining lengthy serialVersionUID = -6867736771747690202L;

@Override

public String map(String worth) throws Exception {

return "Kafka and Flink says: " + worth;

}

}).print();

see.execute();

}

}

All the execution has been screen-recorded. If , you possibly can watch it under:<

I hope you loved studying this. Please keep tuned for one more upcoming article the place I’ll clarify how one can stream messages/information from Flink to a Kafka matter.